Tools

To supplement and apply our research, we develop and maintain a variety of software toolkits. Each tackles a different facet of data-efficient machine learning. These include data subset selection for efficiently training deep neural networks, targeted selection for improving performance on desired data slices, and active learning for label-efficient data procurement. We maintain the toolkits below jointly as part of the DECILE team.

Featured

CORDS

A COReset and Data Selection library for making machine learning time-, energy-, cost-, and compute-efficient. Built on PyTorch, CORDS uses state-of-the-art subset selection techniques to greatly reduce the training time, energy, and other resources that deep learning demands, without sacrificing model performance.
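CORDS's own selection strategies are not reproduced here. The plain-Python sketch below illustrates the general idea of coreset selection with greedy k-center selection, a classic coreset heuristic chosen here only for illustration. It does not use PyTorch, and `k_center_greedy` is a hypothetical name, not part of the CORDS API.

```python
import math
from typing import List, Sequence

Point = Sequence[float]


def k_center_greedy(points: Sequence[Point], k: int, first: int = 0) -> List[int]:
    """Greedy k-center selection: repeatedly add the point farthest from the
    current selection, keeping the largest point-to-selection distance small."""
    n = len(points)
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= len(points)")
    selected = [first]
    # min_dist[i] is the distance from point i to its nearest selected point.
    min_dist = [math.dist(p, points[first]) for p in points]
    while len(selected) < k:
        farthest = max(range(n), key=lambda i: min_dist[i])
        selected.append(farthest)
        for i, p in enumerate(points):
            min_dist[i] = min(min_dist[i], math.dist(p, points[farthest]))
    return selected


# Two tight pairs plus one outlier: k=3 picks one point from each group.
points = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0), (10.0, 0.0)]
print(k_center_greedy(points, 3))  # [0, 4, 2]
```

Training on the selected indices instead of the full dataset is where the savings would come from. The greedy loop itself costs O(nk) distance computations.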

DISTIL

A Deep, dIverSified inTeractIve Learning library for performing active learning in deep learning. Built on PyTorch, DISTIL implements many state-of-the-art active learning strategies to help reduce labeling costs when procuring new data. By selecting only the most informative unlabeled data for labeling, DISTIL can reduce the required labeling cost by a factor of 2 to 5.
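The sketch below makes "selecting the most informative unlabeled data" concrete with entropy-based uncertainty sampling, one common active learning strategy. It assumes a model has already produced class probabilities for each unlabeled point. `select_by_entropy` is a hypothetical helper, not DISTIL's interface.

```python
import math
from typing import List, Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_by_entropy(pred_probs: Sequence[Sequence[float]], budget: int) -> List[int]:
    """Indices of the `budget` unlabeled points whose predictions are most uncertain."""
    ranked = sorted(range(len(pred_probs)), key=lambda i: entropy(pred_probs[i]), reverse=True)
    return ranked[:budget]


# Softmax outputs of some already-trained model on four unlabeled points.
probs = [[0.98, 0.02], [0.5, 0.5], [0.7, 0.3], [0.55, 0.45]]
print(select_by_entropy(probs, 2))  # [1, 3]
```

In a full active learning loop, only the selected points would go to annotators. The model would then be retrained on the enlarged labeled set, and the selection would repeat.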

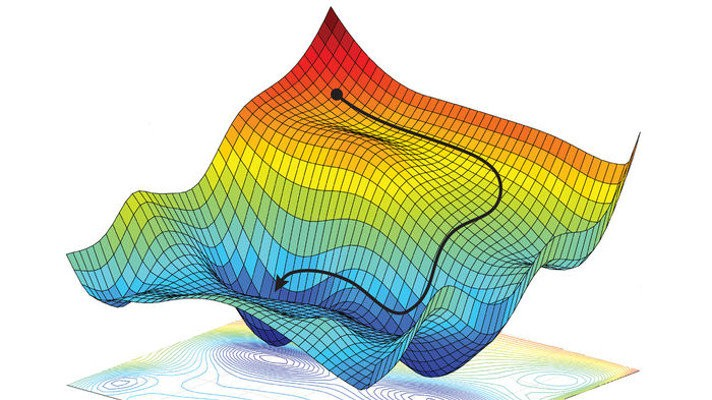

Jensen

A C++ toolkit with API support for convex optimization. Jensen implements many convex optimization algorithms, including gradient descent, stochastic gradient descent, and L-BFGS, across a range of convex losses commonly used in regression and classification. With Jensen, models can be trained and deployed in only a few lines of code, and its framework makes it easy to add new convex optimization algorithms.
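Jensen itself is written in C++, and its API is not shown here. As a minimal sketch of the simplest algorithm listed above, the Python below runs full-batch gradient descent on L2-regularized logistic regression, a convex loss typical of linear classifiers. The function names, step size, and regularization weight are illustrative assumptions.

```python
import math


def loss_and_grad(w, X, y, lam):
    """Average logistic loss (labels in {-1, +1}) plus (lam/2)*||w||^2, and its gradient."""
    n = len(X)
    loss = 0.5 * lam * sum(wj * wj for wj in w)
    grad = [lam * wj for wj in w]
    for xi, yi in zip(X, y):
        margin = yi * sum(wj * xj for wj, xj in zip(w, xi))
        loss += math.log1p(math.exp(-margin)) / n
        # d/dw log(1 + exp(-margin)) = -yi * xi / (1 + exp(margin))
        coef = -yi / (1.0 + math.exp(margin)) / n
        for j, xij in enumerate(xi):
            grad[j] += coef * xij
    return loss, grad


def gradient_descent(X, y, lam=0.01, step=0.5, iters=200):
    """Plain full-batch gradient descent with a fixed step size."""
    w = [0.0] * len(X[0])
    for _ in range(iters):
        _, g = loss_and_grad(w, X, y, lam)
        w = [wj - step * gj for wj, gj in zip(w, g)]
    return w


# The first feature is a constant 1 acting as a bias term.
X = [[1.0, 2.0], [1.0, 1.0], [1.0, -1.0], [1.0, -2.0]]
y = [1, 1, -1, -1]
w = gradient_descent(X, y)
print([1 if sum(a * b for a, b in zip(w, x)) > 0 else -1 for x in X])  # [1, 1, -1, -1]
```

Because this objective is convex and smooth, any fixed step below 2/L, where L is the Lipschitz constant of the gradient, is enough for plain gradient descent to converge.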

SPEAR

A SemisuPervisEd dAta pRogramming library in Python. Implementing several recent data programming approaches, SPEAR lets its users programmatically label and build training datasets using weak supervision. By combining subset selection techniques, data programming principles, and semi-supervised learning, SPEAR offers state-of-the-art capabilities in this space.
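The sketch below illustrates data programming on a toy spam task. It defines three hypothetical labeling functions and combines their weak labels by simple majority vote. SPEAR's own approaches, which combine labeling functions with subset selection and semi-supervised learning, are more sophisticated, and none of the names below come from SPEAR.

```python
from collections import Counter
from typing import Callable, List, Optional

ABSTAIN = None  # a labeling function returns None when it has no opinion
LabelingFunction = Callable[[str], Optional[str]]


def lf_mentions_free(text: str) -> Optional[str]:
    return "spam" if "free" in text.lower() else ABSTAIN


def lf_has_link(text: str) -> Optional[str]:
    return "spam" if "http" in text.lower() else ABSTAIN


def lf_starts_with_greeting(text: str) -> Optional[str]:
    words = text.lower().split()
    first = words[0].strip(",.!") if words else ""
    return "ham" if first in {"hi", "hello"} else ABSTAIN


def majority_vote(text: str, lfs: List[LabelingFunction]) -> Optional[str]:
    """Combine weak labels by majority vote; abstain if no LF fires or the top labels tie."""
    votes = [v for v in (lf(text) for lf in lfs) if v is not ABSTAIN]
    if not votes:
        return ABSTAIN
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN
    return ranked[0][0]


LFS = [lf_mentions_free, lf_has_link, lf_starts_with_greeting]
for msg in ["Claim your FREE prize at http://example.com", "Hello, lunch tomorrow?", "Meeting moved to 3pm"]:
    print(msg, "->", majority_vote(msg, LFS))
```

Points on which every labeling function abstains stay unlabeled. Semi-supervised learning can still make use of these points.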

SubModLib

An efficient, scalable library for submodular optimization that covers many common submodular functions and optimization techniques. SubModLib exposes a Python interface while implementing its core functionality in C++, combining Python's ease of use with C++'s efficiency. Its modular design makes it easy to solve a wide range of problems, notably many of those that arise in data-efficient machine learning. SubModLib is used frequently in our other toolkits.
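The plain-Python code below sketches the kind of problem SubModLib solves. It greedily maximizes the facility location function f(S) = Σ_i max_{j∈S} sim[i][j] under a cardinality budget. This is a naive reference implementation, not SubModLib's API or its optimized C++ code, and it assumes a non-negative similarity matrix.

```python
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def facility_location(sim: Matrix, subset: List[int]) -> float:
    """f(S) = sum over i of max_{j in S} sim[i][j], with f(empty set) = 0."""
    if not subset:
        return 0.0
    return sum(max(row[j] for j in subset) for row in sim)


def greedy_maximize(sim: Matrix, budget: int) -> List[int]:
    """Naive greedy: at each step add the element with the largest marginal gain."""
    selected: List[int] = []
    for _ in range(min(budget, len(sim))):
        base = facility_location(sim, selected)
        candidates = [j for j in range(len(sim)) if j not in selected]
        best = max(candidates, key=lambda j: facility_location(sim, selected + [j]) - base)
        selected.append(best)
    return selected


# Two clusters, {0, 1} and {2, 3}: a budget of 2 picks one representative from each.
sim = [
    [1.0, 0.9, 0.1, 0.0],
    [0.9, 1.0, 0.0, 0.1],
    [0.1, 0.0, 1.0, 0.8],
    [0.0, 0.1, 0.8, 1.0],
]
print(greedy_maximize(sim, 2))
```

For a monotone submodular function such as facility location with non-negative similarities, this greedy rule is guaranteed to reach at least a (1 - 1/e) fraction of the optimal value under a cardinality constraint. Optimized implementations typically avoid recomputing every marginal gain by evaluating gains lazily.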

TRUST

A TaRgeted sUbSet selecTion library for procuring data slices of interest to improve model performance and personalize models. Built on PyTorch, TRUST provides efficient implementations of algorithms for targeted subset selection. TRUST can filter out undesired out-of-distribution data, avoid redundancy in the selected data, and mine rare instances. Together, these capabilities put model personalization within easy reach of users, improving performance in a target domain through targeted subset selection.
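TRUST's algorithms are not reproduced here. The sketch below illustrates two of the ideas named above, relevance to a target slice and avoiding redundancy, using maximal marginal relevance (MMR), a simpler technique chosen for illustration. At each step, it picks the pool point that best trades off cosine similarity to a set of query exemplars against similarity to points already chosen. `mmr_select` and the `trade_off` weight are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def mmr_select(pool: Sequence[Vector], queries: Sequence[Vector],
               budget: int, trade_off: float = 0.7) -> List[int]:
    """Greedy MMR: reward similarity to the query exemplars and
    penalize similarity to points that are already selected."""
    selected: List[int] = []
    remaining = list(range(len(pool)))

    def score(i: int) -> float:
        relevance = max(cosine(pool[i], q) for q in queries)
        redundancy = max((cosine(pool[i], pool[j]) for j in selected), default=0.0)
        return trade_off * relevance - (1.0 - trade_off) * redundancy

    while remaining and len(selected) < budget:
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


# Target slice described by two query exemplars; index 1 nearly duplicates index 0.
queries = [(1.0, 0.0), (0.6, 0.8)]
pool = [(1.0, 0.0), (1.0, 0.01), (0.6, 0.8), (0.0, -1.0)]
print(sorted(mmr_select(pool, queries, 2)))  # [0, 2]
```

Raising `trade_off` toward 1 favors pure relevance to the query set, and lowering it puts more weight on diversity among the selected points.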